Recently at work I used a supercomputer to train a neural network that learned to beat Atari Pong from pixels in just 3.9 minutes.

Several people have asked for a step-by-step tutorial on this, and one is on the way. But before that I wanted to write something else: I wanted to write about everything that didn’t work out along the way.

Most of the posts and papers I read about deep learning make their author look like an inspired genius, knowing exactly how to interpret the results and move forward to their inevitable success. I can’t rule out that everyone else in this field actually is an inspired genius! But my experience was anything but smooth sailing, and I’d love to share what trying to achieve even a result as modest as this one was actually like.

The step-by-step tutorial will follow when I get back from holiday and can tidy up the source and stick it on GitHub for your pleasure.

Part One: Optimism and Repositories

It begins as all good things do: with a day full of optimism. The sun is bright, the sea breeze ruffles my hair playfully and the world is full of sweetness and so forth. I’ve just read DeepMind’s superb A3C paper and am convinced that I can take their work (which produces better reinforcement learning results by using multiple concurrent workers) and run it at supercomputer scales.

A quick search shows a satisfying range of projects that have implemented this work in various deep learning frameworks - from Torch to TensorFlow to Keras.

My plan: download one, run it using multiple threads on one machine, try it on some special machines with hundreds of cores, then parallelize it to use multiple machines. Simple!

Part Two: I Don’t Know Why It Doesn’t Work

A monk once told me that each of us is broken in their own special way - we are all beautiful yet flawed and finding others who accept us as we are is the greatest joy in this life. Well, GitHub projects are just like that.

Every single one I tried was broken in its own special way.

That’s not entirely fair. They were perhaps fine, but for my purposes unpredictably and frequently frustratingly unsuitable. Also sometimes just broken. Perhaps some examples will show you what I mean!

My favourite implementation was undoubtedly Kaixhin’s based on Torch. This one actually reimplements specific papers with hyperparameters provided by the authors! That level of attention to detail is both impressive and necessary, as we shall see later.

Getting this and an optimized Torch up and running was blessedly straightforward. When it came to running it on more cores I went to one of our Xeon Phi KNL machines with over 200 cores. Surely this would be perfect, I thought!

Single-thread performance was abysmal, but after installing Intel’s optimized Torch and NumPy distributions I figured that was as good as I would get and started trying to scale up. This worked well up to a point. That point was when storing the arrays pushed Lua above 1 GB of memory.

Apparently on 64-bit machines Lua has a 1 GB memory limit. I’m not sure why anyone thinks this is an acceptable state of affairs, but the workarounds did not seem a more fruitful avenue to pursue than simply trying another implementation.

I found a TensorFlow implementation that already allowed you to run multiple distributed TensorFlow instances! Had someone already solved this, I wondered? Oh, sweet summer child, how little I knew of the joys that awaited me.

The existing multi-GPU implementation reserved an entire GPU for the master instance, apparently unnecessarily (I was able to eliminate this by making the CUDA devices invisible to the master). TensorFlow itself would try to use all the cores simultaneously, competing with any other instances on the same physical node, and limiting inter- and intra-op parallelism seemed to have no effect on this. Profiling also showed TensorFlow spending a huge amount of time copying, serializing and deserializing data for transfer. This didn’t seem like a great start either.
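Hiding GPUs from a process is usually done with the `CUDA_VISIBLE_DEVICES` environment variable, which has to be set before the CUDA runtime initializes in that process. Here is a minimal sketch of a launcher helper, assuming a hypothetical layout where rank 0 is the master and every other rank gets one GPU, assigned round-robin. `worker_environment` is an illustrative name, not part of TensorFlow or of the implementation described above.

```python
import os


def worker_environment(rank, n_gpus, base_env=None):
    """Return a copy of the environment for the process with this rank.

    Hypothetical layout: rank 0 is the master and sees no GPUs at all;
    ranks 1..N each see exactly one GPU, assigned round-robin.
    """
    env = dict(os.environ if base_env is None else base_env)
    if rank == 0 or n_gpus == 0:
        # An empty value hides every CUDA device from this process
        env["CUDA_VISIBLE_DEVICES"] = ""
    else:
        env["CUDA_VISIBLE_DEVICES"] = str((rank - 1) % n_gpus)
    return env
```

Each instance would then be started with something like `subprocess.Popen(cmd, env=worker_environment(rank, n_gpus))`, so the master never claims a device it doesn't need.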

I found another A3C implementation that ran in Keras/Theano which didn’t have these issues and left it running at the default settings for 24 hours.

It didn’t learn a damn thing.

This is the most perplexing part of training neural networks - there are so few tools that give insight into why a network fails to converge on even a poor solution. It could be any of:

* Hyperparameters need to be more carefully tuned (algorithms can be rather sensitive even within similar domains)

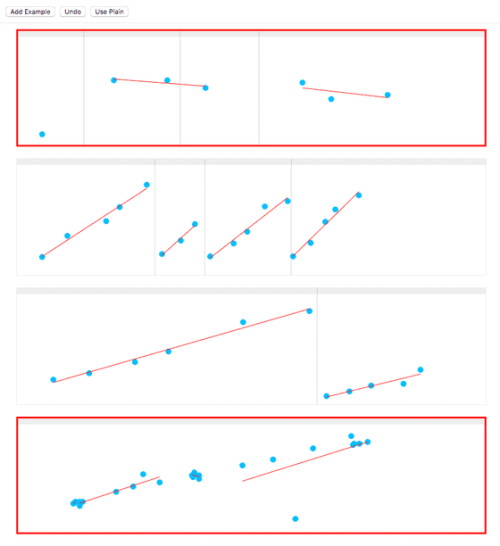

* Initialization is incorrect and gradients shrink towards zero as they flow back through the layers (the vanishing gradient problem) or grow until training becomes unstable (the exploding gradient problem) - these at least you can check by producing images of the weights and gradients and staring hard at them, like astrologers seeking meaning in the stars.

* The input is not preprocessed, normalized or augmented enough. Or it’s too much of one of those things.

* The problem you’re trying to solve simply isn’t amenable to training by gradient descent.
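A cheaper complement to staring at weight images is to print the gradient norm that reaches each layer during backpropagation. The sketch below uses a toy stack of sigmoid layers with a deliberately small initialization scale (`weight_scale=0.1`, a hypothetical choice) to show the vanishing case: the norm shrinks layer by layer as it travels backwards. The function and its parameters are illustrative, not taken from any of the implementations mentioned here.

```python
import numpy as np


def layer_gradient_norms(n_layers=10, width=64, weight_scale=0.1, seed=0):
    """Backpropagate a unit-norm gradient through a stack of sigmoid layers.

    Returns the norm of the gradient reaching each layer's input,
    ordered from the output layer back towards the input.
    """
    rng = np.random.default_rng(seed)
    weights = [rng.normal(0.0, weight_scale, size=(width, width))
               for _ in range(n_layers)]

    # Forward pass, keeping each layer's sigmoid output for the backward pass
    x = rng.normal(size=width)
    activations = []
    for w in weights:
        x = 1.0 / (1.0 + np.exp(-(w @ x)))
        activations.append(x)

    # Backward pass, starting from a unit-norm gradient at the output
    grad = np.ones(width) / np.sqrt(width)
    norms = []
    for w, a in zip(reversed(weights), reversed(activations)):
        # Chain rule: through the sigmoid (a * (1 - a)), then the linear map
        grad = w.T @ (grad * a * (1.0 - a))
        norms.append(float(np.linalg.norm(grad)))
    return norms


for depth, norm in enumerate(layer_gradient_norms()):
    print(f"{depth} layers back: gradient norm {norm:.2e}")
```

Since the sigmoid's derivative never exceeds 0.25, small weights compound that shrinkage at every layer. Logging these numbers every few hundred updates is a quick way to tell a vanishing or exploding network apart from one that is merely slow.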

Honestly, at the moment training a neural network to do something even slightly novel feels rather like feeding a stack of punch cards to a mechanical behemoth from the twentieth century and waiting several hours to see whether or not it goes boink.

Part Three: Rules of Thumb

At times I felt like a very poor reinforcement learning algorithm randomly casting about in the hope of getting some kind of reward at all and paying no attention to the gradient. After realizing the irony of this position I became a little more systematic. If you face the same situation, these rules of thumb might help you too:

- Set some expectations for what success or failure will look like that you can test rapidly. If the papers show some learning after 100k steps then run to 100k steps and check your network has made progress. The shorter this cycle the better, for obvious reasons. Remember: staring at a stream of fluctuating error rates and willing them to decrease is the first step towards madness…

- Every failure is an opportunity to learn. Ask why these hyperparameters or this network architecture or dataset did not show convergence. How could you disprove that theory? This can be slow, painstaking work sometimes, but I learned a lot. It really, really helps to do this on a network that you already know can work at least once. Play with all the settings, find the points at which it stops working and see what those failure modes look like.

- Start with a very simple, direct model and get it to show some level of learning, however slight. Then build up gradually from there. This is the single most important piece of advice I ever received.

- Be prepared to revisit papers and lecture notes as often as necessary to make sure you have a decent mathematical intuition of what is happening in your network and why it might (or might not) converge. It’s not black magic, and understanding the principles can make a big difference to your approach.
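The first rule of thumb can be turned into a crude, automatic smoke test. Here is a minimal sketch, assuming you log one total reward per episode: after a fixed step budget, compare the mean reward of the first and last `window` episodes. The function name, `window=100` and `min_gain=1.0` are hypothetical choices, not values from any paper.

```python
import numpy as np


def shows_learning(episode_rewards, window=100, min_gain=1.0):
    """Crude smoke test: did the mean reward of the last `window` episodes
    beat the mean of the first `window` episodes by at least `min_gain`?"""
    rewards = np.asarray(episode_rewards, dtype=float)
    if rewards.size < 2 * window:
        raise ValueError("need at least 2 * window episodes to compare")
    gain = rewards[-window:].mean() - rewards[:window].mean()
    return bool(gain >= min_gain)
```

A run that fails this check at the step count where the paper already shows progress is a signal to stop and investigate, rather than keep watching the numbers fluctuate.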

Part Four: Back to Basics

Following this advice led me to Karpathy’s wonderful 130-line Python+NumPy policy gradient example. This was the first time I ran a piece of code that actually showed learning right off the bat across a range of systems. If you haven’t already, you can follow what happened next in my more detailed blog posts about it.
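At the heart of a policy gradient example like this is turning sparse per-step rewards into discounted returns, which then weight the gradient of each action's log-probability. The sketch below is written in that style rather than copied from it. `gamma=0.99` is an assumed discount factor, and resetting the running sum at every nonzero reward treats each Pong point as the end of an independent rally, which is a Pong-specific shortcut.

```python
import numpy as np


def discount_rewards(rewards, gamma=0.99):
    """Discounted return for every time step, computed backwards in time.

    The running sum is reset at each nonzero reward, i.e. each point
    scored in Pong is treated as the end of a rally.
    """
    rewards = np.asarray(rewards, dtype=float)
    returns = np.zeros_like(rewards)
    running = 0.0
    for t in reversed(range(rewards.size)):
        if rewards[t] != 0:
            running = 0.0  # Pong-specific: a point ends the current rally
        running = running * gamma + rewards[t]
        returns[t] = running
    return returns


def standardize(returns, eps=1e-8):
    """Zero-mean, unit-variance returns; eps guards against division by zero."""
    return (returns - returns.mean()) / (returns.std() + eps)
```

Standardizing the returns is a cheap way to keep the gradient scale steady from batch to batch; it's a practical heuristic rather than part of the policy gradient theorem itself.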

The TL;DR is that I added MPI parallelization and scaled it up on a local machine, then in the cloud, then on a supercomputer. At times it looked like it wouldn’t scale well but this was often because of incidental details I was able to overcome rather than hitting fundamental scaling limits.
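One common way to add MPI data parallelism to a policy gradient learner is for every process to play its own games and compute a local gradient, then average the gradients with an all-reduce so that every process applies the identical update. The sketch below assumes the `mpi4py` package (its buffer-based `Allreduce` on NumPy arrays) and falls back to a single process if it isn't installed. `average_gradients` is a hypothetical helper, not necessarily how this project did it.

```python
import numpy as np

try:
    from mpi4py import MPI  # assumption: mpi4py is available; optional
    COMM = MPI.COMM_WORLD
except ImportError:
    MPI = None
    COMM = None


def average_gradients(local_grad):
    """Average a NumPy gradient across all MPI processes (hypothetical helper)."""
    local_grad = np.ascontiguousarray(local_grad, dtype=np.float64)
    if COMM is None or COMM.Get_size() == 1:
        return local_grad.copy()  # single process: nothing to average
    total = np.empty_like(local_grad)
    COMM.Allreduce(local_grad, total, op=MPI.SUM)  # element-wise sum over ranks
    return total / COMM.Get_size()
```

Launched with something like `mpirun -n 4 python train.py`, each rank gathers its own games and the averaged gradient behaves like one much larger batch. For the weights to stay in sync, every process must also start from the same initial weights, for example by broadcasting rank 0's weights with `COMM.Bcast` before training begins.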

Part Five: I Don’t Know Why It Does Work

In the blog I refer briefly to parallel policy gradients working so well because it reduces the variance of the score function estimate. This is worked into the text in an offhand, casual manner designed to make me look like an inspired genius.

What actually happened when the first parallel implementations started converging within hours instead of days is that I was somewhat shocked. My previous experience with data parallelism (in which you split the training batch across multiple machines and process the pieces concurrently) had taught me that adding more parallel learners quickly runs into diminishing returns.

The problem I’d seen in supervised learning was that by doing so you’re increasing the batch size, and extremely large batches don’t converge as quickly as small ones. The literature suggests you can compensate by increasing the learning rate to a certain extent, but I didn’t know of anyone using batch sizes larger than a couple of thousand items.

In this case the effective batch size was already thousands of frame/action pairs on a single process, so I didn’t expect to get much mileage out of increasing it by several orders of magnitude. When it did help, I rather wondered why.

It was only after revisiting the policy gradient theorem that it became clear. Each sampled action, weighted by the reward that followed it, gives an unbiased estimate of the policy gradient (the gradient of the expected reward, computed through the score function). As long as this sampling is unbiased, the policy gradient method will eventually converge, but such random sampling is extremely noisy and has very high variance. Most of the more elaborate policy learning methods attempt to reduce this variance in a variety of ways, but simply taking lots and lots of samples is a very simple and scalable way to reduce it directly: averaging N independent samples cuts the variance of the estimate by a factor of N.
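The variance argument is easy to check numerically. Here is a minimal sketch using a hypothetical one-step problem rather than Pong: a policy picks action 1 with probability p = sigmoid(theta) and receives reward 1 for it, so the true gradient of the expected reward is p(1 - p). The score-function (REINFORCE) estimate averaged over N samples is unbiased for every N, but its variance shrinks in proportion to 1/N.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def reinforce_gradient(theta, n_samples, rng):
    """Score-function estimate of d/dtheta E[r] for a one-step Bernoulli policy."""
    p = sigmoid(theta)
    actions = (rng.random(n_samples) < p).astype(float)  # a ~ Bernoulli(p)
    rewards = actions  # toy reward: 1 for action 1, 0 otherwise
    score = actions - p  # d/dtheta log pi(a | theta) for a logistic policy
    return np.mean(rewards * score)


def estimator_spread(theta, n_samples, n_trials=2000, seed=0):
    """Mean and variance of the estimate over many independent batches."""
    rng = np.random.default_rng(seed)
    estimates = np.array([reinforce_gradient(theta, n_samples, rng)
                          for _ in range(n_trials)])
    return estimates.mean(), estimates.var()


for n in (10, 1000):
    mean, var = estimator_spread(0.0, n)
    print(f"N={n}: mean {mean:.4f}, variance {var:.2e}")
```

With `theta = 0.0` the true gradient is 0.25. Going from 10 to 1,000 samples per estimate leaves the mean unchanged but should cut the variance by roughly a factor of 100, which is the same effect as running many more games per batch.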

In fact by running several thousand games per batch the model only requires 70 weight updates to go from completely random behaviour to beating Atari Pong from pixels. I confess to a certain curiosity as to how low this can go. Pong is not a deep or complex game. Learning a winning strategy in a single weight update would be kinda neat!

Part Six: Finally Something I Can Do

Having seen that the approach was working, actually optimizing it and running it at extreme scale was the only straightforward part of the entire process. This is something I know how to do, and there are very good tools for measuring and improving parallel performance. I rather suspect that scalable deep learning will need a similarly large investment in tools for model correctness and debugging before it becomes widely accessible.

Part Seven: Is It Just Me?

So that’s the background to the story - dozens of dead ends, desperate and frustrated rereading of source code and papers to discover sigmoid activation functions paired with mean squared error costs, single frames passed as input instead of a sequence or difference frame, and all manner of other sins before finally managing to get something working.
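For Pong, feeding the network the difference between consecutive preprocessed frames, rather than a single frame, lets it see which way the ball is moving. The sketch below follows the preprocessing used in Karpathy's example (crop, 2x downsample, erase the background colours 144 and 109, binarize). Those constants are specific to the Atari Pong screen, and `difference_input` is a hypothetical helper name.

```python
import numpy as np


def preprocess(frame):
    """Turn a 210x160x3 uint8 Pong frame into a flat 80x80 binary float vector."""
    img = frame[35:195]             # crop to the playing field (160 rows)
    img = img[::2, ::2, 0].copy()   # downsample by 2, keep one channel -> 80x80
    img[img == 144] = 0             # erase background (type 1)
    img[img == 109] = 0             # erase background (type 2)
    img[img != 0] = 1               # paddles and ball become 1
    return img.astype(np.float32).ravel()


def difference_input(frame, prev_processed):
    """Return (network input, processed frame to keep for the next step)."""
    cur = preprocess(frame)
    if prev_processed is None:
        return np.zeros_like(cur), cur  # no motion information on the first frame
    return cur - prev_processed, cur
```

The `.copy()` keeps the raw observation untouched, so the same frame can still be rendered or logged after preprocessing.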

I’d love to hear from others who have tried and failed or succeeded. Did your story mirror mine with similar amounts of desperation, persistence, surprise and luck? Have you found a sound method for exploring new use cases that I should be using and sharing?

Deep learning is the wild west right now - stories of exciting progress all around but very little hard and fast support to help you on your way. This short yet honest making-of is my attempt to change that.

Happy training!