A New Method of Image Fusion Based on Redundant Wavelet Transform

LI Xu*, Michel ROUX, HE Mingyi*, Francis SCHMITT

*School of Electronics and Information, Northwestern Polytechnical University, Xian, 710072, P. R. China

Department of Signal and Image Processing, TELECOM ParisTech, Paris, 75013, France

nwpu_lixu@126.com, myhe@nwpu.edu.cn

Keywords: image fusion, redundant wavelet transform, edge enhanced detail, consistency verification.

Abstract

The goal of image fusion is to combine information from multiple images of the same scene. The result of image fusion is a new image which is more suitable for human and machine perception or for further image-processing tasks such as segmentation, feature extraction, object detection and target recognition. In this paper we present a new fusion algorithm based on the redundant wavelet transform (RWT). The two source images are first decomposed using the RWT, which is shift-invariant. The wavelet coefficients from the approximation plane and the wavelet planes are then combined, and the fused image is reconstructed by the inverse RWT. To evaluate the performance of the image fusion results, we investigate both subjective and objective evaluation measures. The experimental results show that the new algorithm outperforms the other conventional methods.

1 Introduction

Image fusion is widely used in many fields such as remote sensing, medical imaging, machine vision and military applications. The purpose of image fusion is to produce a single image from a set of input images, so that the fused image carries more complete information and is more useful for human or machine perception. The simplest fusion algorithm takes the pixel-by-pixel average of the input images to create the new image. However, this method is not appropriate since it creates a blurred image in which the details are attenuated. For this reason, various methods have been developed to perform better image fusion. A proper algorithm for image fusion must ensure that all the important visual information found in the input images is transferred into the fused image without the introduction of any artifacts or inconsistencies, and it should also be reliable and robust to imperfections such as mis-registration. The standard image fusion techniques, such as those that use the Laplacian pyramid transform [14], intensity-hue-saturation (IHS) [7, 18], principal component analysis (PCA) [9, 20], and the Brovey transform [3], often lead to poor results that are inconsistent with the ideal output of the fusion.

Alternative pyramid decomposition methods for image fusion, such as the ratio-of-low-pass pyramid (ROLP) and the gradient pyramid (GP), are also available. The ROLP pyramid, which was introduced by Toet [2], is very similar to a Laplacian pyramid. Instead of using the difference of successive Gaussian pyramid levels to form the Laplacian pyramid, the ratio of successive low-pass filtered images is used to form the so-called ROLP pyramid. A GP for an image can be obtained by applying a gradient operator to each level of the Gaussian pyramid representation. The image can be completely represented by a set of four such GPs, which represent the horizontal, vertical, and two diagonal directions respectively [15]. More recently, the discrete wavelet transform (DWT) has been used by many authors [8, 13, 1, 11] to improve the quality of the fused image. For example, Li et al. [8] proposed a fusion rule based on maximum absolute value selection with consistency verification. Santos et al. [11] developed several gradient-based methods for merging the coefficients during fusion. However, because of the underlying down-sampling process in the DWT, its multiresolution decompositions and consequently the fusion results are shift variant. This is particularly undesirable when the source images are noisy or cannot be perfectly registered.

In this paper, a novel image fusion algorithm based on the RWT is proposed. In both subjective and objective evaluations, experimental results show that the new algorithm improves the quality of the fused image compared with other conventional approaches.

The remainder of this paper is organized as follows. Section 2 gives a brief review of the fundamental theory of the redundant wavelet transform. The proposed fusion method based on the à trous algorithm is described in Section 3. In Section 4, experimental results are reported to demonstrate the performance of the proposed method. The paper closes with concluding remarks in Section 5.

© 2008 The Institution of Engineering and Technology. Printed and published by the IET, Michael Faraday House, Six Hills Way, Stevenage, Herts SG1 2AY. VIE 08.

2 Redundant wavelet decomposition

When one is concerned with an analysis problem, redundancy of information is always helpful. This remains true for image fusion, since any fusion rule essentially reduces to analyzing the images to be fused and then selecting the dominant features that are important in a particular sense. The popular Mallat's algorithm [17] uses an orthonormal basis, but the transform is not shift-invariant, which can be a problem in signal analysis, pattern recognition or, as in our case, image fusion. A redundant representation, which avoids decimation, has the same number of wavelet coefficients at all levels. This fundamental property can help to develop fusion procedures based on the following intuitive idea: when a dominant or significant feature appears at a given level, it should appear at successive levels as well. In contrast, a non-significant feature such as noise does not appear at successive levels. A dominant feature is thus tied to its presence or duplication at successive levels. The discrete implementation of the redundant wavelet decomposition (RWD) can be accomplished by using the à trous ("with holes") algorithm, which has the following interesting properties [10, 5]:

• One can follow the evolution of the wavelet decomposition from level to level.

• The algorithm produces a single wavelet coefficient plane at each level of decomposition.

• The wavelet coefficients are computed for each location, allowing a better detection of a dominant feature.

• The algorithm is easily implemented.

We use the à trous algorithm, which better preserves the edges of the image, to decompose the image into wavelet planes. Given an image $I$, we construct the sequence of approximations as:

$$F(I) = A_1, \qquad F(A_1) = A_2, \qquad \ldots, \qquad F(A_{J-1}) = A_J \tag{1}$$

where $F$ is a low-pass filter, which here has been implemented by a convolution with a $5 \times 5$ mask [12, 19]:

$$\frac{1}{256}\begin{pmatrix} 1 & 4 & 6 & 4 & 1 \\ 4 & 16 & 24 & 16 & 4 \\ 6 & 24 & 36 & 24 & 6 \\ 4 & 16 & 24 & 16 & 4 \\ 1 & 4 & 6 & 4 & 1 \end{pmatrix} \tag{2}$$

The wavelet planes are computed as the differences between two consecutive approximations $A_{j-1}$ and $A_j$. Letting

$$d_j = A_{j-1} - A_j, \qquad j = 1, 2, \ldots, J \tag{3}$$

where $d_j$ is the two-dimensional wavelet coefficient (i.e. wavelet plane) at resolution level $j$. Given $A_0 = I$, we can write the reconstruction formula

$$I = \sum_{j=1}^{J} d_j + A_J \tag{4}$$

In this representation, the images $A_j$ ($j = 0, 1, \ldots, J$) are versions of the original image $I$ at increasing scales (decreasing resolution levels), $d_j$ ($j = 1, \ldots, J$) are the multiresolution wavelet planes, and $A_J$ is a residual image. The coefficients of each wavelet plane fluctuate around zero, and their mean value is approximately zero. The dominant features in the original image are described by the wavelet coefficients with larger absolute values in the wavelet plane. Because all wavelet planes in the à trous algorithm have the same number of pixels as the original image, it is easy to find the space-frequency correspondence between the original image and each wavelet plane.
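The decomposition in Equations (1) to (4) can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function names, the reflective border handling and the doubling of the tap spacing at each level (the usual "holes" of the à trous scheme, which the text does not spell out) are assumptions. The 5 × 5 mask of Equation (2) is separable, so it is applied as two 1-D passes.

```python
import numpy as np

# 1-D factor of the 5x5 mask in Eq. (2): np.outer(B3, B3) reproduces the mask.
B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def smooth(img, step):
    """Apply the low-pass filter F with filter taps `step` pixels apart."""
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        pad = [(0, 0), (0, 0)]
        pad[axis] = (2 * step, 2 * step)
        padded = np.pad(out, pad, mode="reflect")  # border handling is an assumption
        n = out.shape[axis]
        acc = np.zeros_like(out)
        for k, weight in enumerate(B3):
            window = [slice(None), slice(None)]
            window[axis] = slice(k * step, k * step + n)
            acc += weight * padded[tuple(window)]
        out = acc
    return out


def atrous_decompose(img, levels):
    """Return ([d_1, ..., d_J], A_J) following Eqs. (1) and (3)."""
    approx = np.asarray(img, dtype=float)    # A_0 = I
    planes = []
    for j in range(1, levels + 1):
        nxt = smooth(approx, 2 ** (j - 1))   # A_j = F(A_{j-1}), assumed dilation 2^(j-1)
        planes.append(approx - nxt)          # d_j = A_{j-1} - A_j
        approx = nxt
    return planes, approx


def atrous_reconstruct(planes, residual):
    """Eq. (4): I = sum_j d_j + A_J."""
    return residual + np.sum(planes, axis=0)


# Small demonstration on a random test image (hypothetical data).
rng = np.random.default_rng(0)
image = rng.uniform(0, 255, size=(32, 32))
planes, residual = atrous_decompose(image, levels=3)
restored = atrous_reconstruct(planes, residual)
```

Because each plane is a difference of consecutive approximations, the sum in Equation (4) telescopes, so `restored` matches `image` up to floating-point rounding whatever filter is used.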

3 A new algorithm of image fusion

3.1 Fusion scheme

The overview schematic diagram of our fusion scheme is

shown in Figure 1. We take it as a prerequisite that the input

images must be registered, so that the corresponding pixels

are aligned. The input images X and Y are decomposed by using the à trous algorithm, allowing the representation of each image by a set of wavelet coefficient planes. Then, the

decision map is generated using a selection rule discussed in

subsection 3.3. Each pixel of the decision map denotes which

image best describes this pixel. Based on the decision map,

we fuse the two images in the wavelet transform domain.

Each wavelet coefficient for the fused image is set to the

wavelet coefficient for one of the source images. The source

image used is determined from the decision map. The final

fused image Z is obtained by taking the inverse redundant

wavelet transform (IRWT).

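[Figure 1 diagram: the wavelet planes $d_1(X), d_2(X), \ldots, d_J(X)$ and $d_1(Y), d_2(Y), \ldots, d_J(Y)$, together with the residuals $A_J(X)$ and $A_J(Y)$, are combined into $d_1(Z), d_2(Z), \ldots, d_J(Z)$ and $A_J(Z)$.]

Figure 1: Fusion scheme using the RWT.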

3.2 Edge enhanced detail

In the proposed fusion algorithm, the two source images are first decomposed by the à trous wavelet algorithm. Because the à trous algorithm involves no upsampling or downsampling, more information is available for fusion, which avoids losing important information from the source images. From the wavelet planes we can extract features. The experimental results show that the sum of the wavelet planes of each image already captures most of the edge information. The output of this summation is an edge image which we call Edge Enhanced Detail (EED).

According to Equation (4), the EED of image $I$ can be expressed as:

$$EED = \sum_{j=1}^{J} d_j = I - A_J \tag{5}$$

Figure 2 shows an example in which we decompose the test

image to three levels. Figure 2(a) is the test image and Figures

2(b), 2(c), and 2(d) show the wavelet planes of the three

decomposition levels respectively. Figure 2(e) is the

approximation of the test image. Figure 2(f) is the EED of the

test image in which we can find the edges of the dominant

features more clearly.

Figure 2: Test image and its EED. (a) Test image. (b) Level-1 decomposition $d_1$. (c) Level-2 decomposition $d_2$. (d) Level-3 decomposition $d_3$. (e) The residual image $A_3$. (f) The EED of the test image.

3.3 Fusion rules

The quality of the fusion is tied to the particular choice of an

appropriate fusion rule. More generally, this rule is defined as

a comparative criterion to apply between the multiresolution

decomposition coefficients of input images.

The method proposed by Toet [2] is a multiresolution

technique that uses the maximum contrast information in the

ROLP pyramids to determine what features are salient in the

images to be fused. Burt and Kolczynski [15] use a match and

saliency measure, which is based upon the weighted energy in

the detail domain, to decide how the oriented gradient

pyramid will be combined. Li et al. [8] consider the maximum absolute value within a window as the activity measure associated with the sample centered in the window.

The maximum selection rule is used to determine which of

the inputs is likely to contain the most useful information.

Santos et al. [11] presented new methods based on the

computation of local and global gradient which takes into

account the grey level difference from point to area in the

decomposed subimages.

In the new approach, we first produce the EED of the two source images by using the à trous algorithm. Then we compare the EEDs of the two source images at each location using the Laplacian operator, because the Laplacian exploits the relevant information derived from the grey level variation in the images, particularly around the edges. At each location $p$, the Laplacian operator $L$ is computed as follows,

$$L_{EED}(p) = \sum_{q \in R,\; q \neq p} \left[ EED(q) - EED(p) \right] \tag{5}$$

where $R$ is the $3 \times 3$ local area surrounding $p$ and $q$ is a location in $R$. To take more information into account, we compute the average value in a region:

$$LA_{EED}(p) = \frac{1}{n_W} \sum_{q \in W} L_{EED}(q) \tag{6}$$

where $W$ is a region of size $m \times n$, $q$ ranges over the coefficients belonging to $W$, and $n_W = m \times n$ is the number of coefficients in $W$. In this paper the region $W$ has the size $3 \times 3$ around $p$, hence $n_W = 9$.

The significant central coefficient is then selected in the fused image $Z$ through the following rule:

If $LA_X(p) > LA_Y(p)$ then

$$d_{j,Z}(p) = d_{j,X}(p), \qquad A_{J,Z}(p) = A_{J,X}(p), \qquad j = 1, 2, \ldots, J,$$

otherwise

$$d_{j,Z}(p) = d_{j,Y}(p), \qquad A_{J,Z}(p) = A_{J,Y}(p), \qquad j = 1, 2, \ldots, J.$$

In our implementation we take the maximum absolute value

of the average as an activity measure. In this way a high

activity indicates the presence of a dominant feature in the

local area. The decision map is then produced with the same

size as the EED, which is also the same size as the source

image. Each pixel in the decision map corresponds to a set of

wavelet coefficients of all decomposition levels. Once the

decision map is determined, the mapping is determined for all

the wavelet coefficients. In this way, we can ensure that all

the corresponding samples are fused the same way and

significant image features tend to be stable with respect to

variations in scale. These features tend to have a nonzero

lifetime in scale space [21]. Thus a binary decision map is

created to record the selection results based on the maximum

selection rule. This map is subject to consistency verification

(CV). Li et al. [8] applied CV using a majority filter. Specifically, if the centre composite coefficient comes from image $X$ while the majority of the surrounding coefficients come from image $Y$, the centre sample is then changed to come from image $Y$.
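The activity measure, the binary decision map and the consistency verification described above can be sketched as follows. This is a hedged illustration rather than the authors' code: the function names, the replicated-border handling and the use of a 3 × 3 window for the majority filter are assumptions, and the Laplacian is taken as the sum of the differences between the eight neighbours and the centre pixel.

```python
import numpy as np


def box_sum3(a):
    """Sum over each 3x3 neighbourhood; border pixels are replicated (assumption)."""
    a = np.asarray(a, dtype=float)
    p = np.pad(a, 1, mode="edge")
    h, w = a.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3))


def activity(eed):
    """Absolute value of the 3x3 average (Eq. 6) of the Laplacian of the EED."""
    eed = np.asarray(eed, dtype=float)
    lap = box_sum3(eed) - 9.0 * eed       # sum over the 8 neighbours of EED(q) - EED(p)
    return np.abs(box_sum3(lap) / 9.0)    # n_W = 9


def decision_map(eed_x, eed_y):
    """True where the coefficients of X are selected, False where Y is selected."""
    return activity(eed_x) > activity(eed_y)


def consistency_verification(dmap):
    """3x3 majority filter on the binary map (window size is an assumption)."""
    votes_for_x = box_sum3(dmap)          # counts the centre and its 8 neighbours
    return votes_for_x >= 5
```

With nine votes in the window, a centre pixel is flipped only when at least five of its eight neighbours disagree with it, which is one way of realizing the majority rule described in the text.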

The steps of the new proposed method are illustrated in

Figure 3.

(1) Decompose the source images X and Y by the à trous algorithm at resolution level 5;

(2) Extract features from the wavelet planes to form the EED;

(3) Compare the activity between the two edge images and apply the selection rule to construct the composite wavelet coefficients;

(4) Perform the IRWT to obtain the fused image.

Figure 3: Schematic diagram for the image fusion.
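Steps (1) to (4) can be put together in one short, self-contained sketch. It is written under the same assumptions as any minimal reimplementation: the helper names, the border handling, the dilation of the filter by powers of two and the 3 × 3 majority window are illustrative choices and are not taken from the paper.

```python
import numpy as np

B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0  # 1-D factor of the mask in Eq. (2)


def smooth(img, step):
    """Separable low-pass filter F with taps `step` pixels apart."""
    out = img
    for axis in (0, 1):
        pad = [(0, 0), (0, 0)]
        pad[axis] = (2 * step, 2 * step)
        padded = np.pad(out, pad, mode="reflect")
        n = out.shape[axis]
        acc = np.zeros_like(out)
        for k, weight in enumerate(B3):
            window = [slice(None), slice(None)]
            window[axis] = slice(k * step, k * step + n)
            acc += weight * padded[tuple(window)]
        out = acc
    return out


def decompose(img, levels):
    """Wavelet planes d_1..d_J and residual A_J."""
    approx, planes = np.asarray(img, dtype=float), []
    for j in range(levels):
        nxt = smooth(approx, 2 ** j)
        planes.append(approx - nxt)
        approx = nxt
    return planes, approx


def box_sum3(a):
    """Sum over each 3x3 neighbourhood with replicated borders."""
    p = np.pad(a, 1, mode="edge")
    h, w = a.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3))


def activity(eed):
    """|3x3 average of the Laplacian of the EED|."""
    return np.abs(box_sum3(box_sum3(eed) - 9.0 * eed) / 9.0)


def fuse(x, y, levels=5):
    planes_x, res_x = decompose(x, levels)               # step (1)
    planes_y, res_y = decompose(y, levels)
    eed_x = np.sum(planes_x, axis=0)                     # step (2)
    eed_y = np.sum(planes_y, axis=0)
    take_x = activity(eed_x) > activity(eed_y)           # step (3): decision map
    take_x = box_sum3(take_x.astype(float)) >= 5         # consistency verification
    planes_z = [np.where(take_x, dx, dy) for dx, dy in zip(planes_x, planes_y)]
    res_z = np.where(take_x, res_x, res_y)
    return res_z + np.sum(planes_z, axis=0)              # step (4): inverse RWT
```

A call such as `fuse(x, y)` expects two registered grey-level arrays of the same shape. Since one decision map drives every level and the reconstruction of Equation (4) is a pixel-wise sum, each output pixel in this sketch equals the corresponding pixel of the selected source image.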

4 Experimental results

The proposed approach has been tested on various image sets.

Here, we use one of them to demonstrate the performance of

the proposed method. Similar results can be observed for

other images.

As shown in Figure 4, (a) and (b) are a pair of multi-focus test

images. In one image, the focus is on the left. In the other

image, the focus is on the right. According to Equation (6),

the results calculated from the EED of Figures 4(a) and 4(b)

are displayed in Figures 4(c) and 4(d) respectively.

Afterwards, we use the distinct features or activities with

different focuses shown in Figures 4(c) and 4(d) as

benchmarks to which the selection rule is applied. Then a

binary decision map is produced corresponding to the

outcome of the proposed selection rule. Figure 4(e) is the

binary decision map which displays how the new wavelet

coefficients are generated from the two input sources. The

bright pixels indicate that coefficients from the image in 4(a)

are selected, while the black pixels indicate that coefficients

from the image in 4(b) are selected. Finally the fused image

obtained by our method is shown in Figure 4(f).

For comparison, the test images in Figures 4(a) and 4(b) are also fused with four other methods. Figures 5(a), 5(b), 5(c) and 5(d) show the fused results obtained with the methods of references [2], [15], [8] and [11] respectively.

Figure 4: Original images and fused image. (a) Focus on the right. (b) Focus on the left. (c) The result from (a) according to Eq. (6). (d) The result from (b) according to Eq. (6). (e) The binary decision map. (f) The fused image.

Figure 5(a) shows more blurring around the edges of objects, and Figure 5(b) is not smooth enough and shows less local continuity, while the other three methods, including ours, give a better visual effect. We also notice that Figures 5(c) and 5(d) have some artifacts around the clock's edge, probably generated by the shift variance of the DWT, which can amplify a small spatial misalignment between the source images. In contrast, our method performs best, producing an image with smooth edges and without obvious artifacts. The proposed fusion method is therefore more effective for image fusion, and the fused image is more suitable for human or machine perception.

In addition to evaluating the performance by visual judgment, i.e. a subjective criterion, objective performance evaluations were also conducted using the following indexes:

Image Definition (ID)

Image definition is a gradient-based measure that reflects small differences in detail and texture changes in the image. For an $M \times N$ image $I$, with the gray value at pixel position $(i, j)$ denoted by $I(i, j)$, its ID is defined as [4]:

$$ID = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left[ \left( \frac{\partial I(i,j)}{\partial x} \right)^{2} + \left( \frac{\partial I(i,j)}{\partial y} \right)^{2} \right]^{1/2} \tag{7}$$

where $\partial I(i,j) / \partial x$ and $\partial I(i,j) / \partial y$ are the first-order differentials of pixel $(i, j)$ in the $x$ and $y$ directions respectively. The larger the ID is, the more detailed information and more localized features are obtained in the fused result.
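As a rough illustration of Equation (7), the ID can be computed with finite differences. The function name and the choice of forward differences (with a zero difference at the last row and column) are assumptions, since the text does not fix the discretization of the first-order differentials.

```python
import numpy as np


def image_definition(img):
    """Mean gradient magnitude of a grey-level image, in the spirit of Eq. (7)."""
    img = np.asarray(img, dtype=float)
    # Forward differences; the appended copy makes the last difference zero.
    dx = np.diff(img, axis=1, append=img[:, -1:])
    dy = np.diff(img, axis=0, append=img[-1:, :])
    return float(np.mean(np.sqrt(dx ** 2 + dy ** 2)))
```

A constant image gives an ID of zero, and sharper images with stronger local grey-level variation give larger values, which matches the interpretation given in the text.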

Mutual Information (MI)

MI is a basic concept from information theory, measuring

the statistical dependence between two random variables or

the amount of information that one variable contains about

the other. Mutual information $I_{XY}$ is given by [16]:

$$I_{XY} = \sum_{i=0}^{255} \sum_{j=0}^{255} p_{X,Y}(i,j) \, \log \frac{p_{X,Y}(i,j)}{p_X(i) \, p_Y(j)} \tag{8}$$

where $p_X$ and $p_Y$ are the probability density functions of the individual images and $p_{X,Y}$ is the joint probability density function. According to Equation (8), if we denote $X$, $Y$ and $Z$ as the source images and the fused image respectively, image fusion performance is measured by the size of

$$MI = I_{XZ} + I_{YZ} \tag{9}$$

where a larger measure implies better image quality.
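Equations (8) and (9) can be estimated from grey-level histograms, as in the following sketch. The function names are hypothetical, the images are assumed to be 8-bit (grey levels 0 to 255), and the base-2 logarithm is an assumption because the text does not state the base.

```python
import numpy as np


def mutual_information(a, b, levels=256):
    """Eq. (8) estimated from the joint grey-level histogram of two images."""
    a = np.asarray(a).ravel()
    b = np.asarray(b).ravel()
    joint, _, _ = np.histogram2d(a, b, bins=levels, range=[[0, levels], [0, levels]])
    p_ab = joint / joint.sum()                  # joint distribution p_{X,Y}
    p_a = p_ab.sum(axis=1, keepdims=True)       # marginal p_X, shape (levels, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)       # marginal p_Y, shape (1, levels)
    nz = p_ab > 0                               # skip empty cells to avoid log(0)
    return float(np.sum(p_ab[nz] * np.log2(p_ab[nz] / (p_a @ p_b)[nz])))


def fusion_mi(x, y, z):
    """Eq. (9): MI = I_XZ + I_YZ for sources x, y and fused image z."""
    return mutual_information(x, z) + mutual_information(y, z)
```

For example, `fusion_mi(x, y, z)` grows when the fused image `z` shares more grey-level statistics with both sources, which is why a larger value is read as better fusion.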

Correlation Coefficient (CC)

CC is often used as a standard measure to evaluate the

spectral resemblance between the new and initial images [6]

and it is described as:

$$CC(f, g) = \frac{\sum_{i,j} \left[ f(i,j) - \bar{f} \right] \left[ g(i,j) - \bar{g} \right]}{\sqrt{\sum_{i,j} \left[ f(i,j) - \bar{f} \right]^{2} \, \sum_{i,j} \left[ g(i,j) - \bar{g} \right]^{2}}} \tag{10}$$

where $f(i, j)$ and $g(i, j)$ stand for the fused image and the source image respectively, and $\bar{f}$ and $\bar{g}$ stand for the mean values of the corresponding data sets.
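Equation (10) translates almost directly into NumPy. The function name below is illustrative, and the sketch assumes that neither image is constant, since the denominator would then be zero.

```python
import numpy as np


def correlation_coefficient(f, g):
    """Eq. (10): correlation between fused image f and source image g."""
    f = np.asarray(f, dtype=float)
    g = np.asarray(g, dtype=float)
    fc = f - f.mean()                 # f(i, j) minus its mean
    gc = g - g.mean()                 # g(i, j) minus its mean
    return float(np.sum(fc * gc) / np.sqrt(np.sum(fc ** 2) * np.sum(gc ** 2)))
```

The value lies between -1 and 1, and it is unchanged by a positive linear rescaling of either image, so it measures resemblance of structure rather than of absolute grey level.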

CC

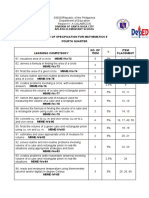

Method ID MI

Fig4(a) Fig4(b)

Ref [2] 3.66 1.81 0.9446 0.9661

Ref [15] 5.74 0.99 0.8532 0.8785

Ref [8] 5.81 2.16 0.9375 0.9862

Ref [11] 5.80 2.18 0.9374 0.9863

Ours 5.83 2.46 0.9478 0.9905

Table 1: Evaluation of five fusion algorithms

The statistical results of the five fusion methods are shown in Table 1. Comparing the values in Table 1, we find that the ID, MI and CC obtained with the proposed method are higher than those of the other methods. Combining the visual inspection and the quantitative results, it can be concluded that the proposed fusion method is more effective.

Figure 5: The fused results with the different methods. (a) Ref [2] method. (b) Ref [15] method. (c) Ref [8] method. (d) Ref [11] method.

5 Conclusions

In this paper we have presented a new fusion method based

on the redundant wavelet transform which combines the

traditional pixel-level fusion with some aspects of feature-

level fusion. We employ feature extraction separately on each

image and then perform the fusion based on the feature of

edge information representing salience or activity, to guide

the fusion process. Since edges of objects and parts of objects carry information of interest, it is reasonable to focus on them in the fusion algorithm. The visual and statistical analyses of the different fusion results indicate that the proposed method meets our expectation of improving the fusion quality, and the experimental results demonstrate that it outperforms the other fusion approaches considered, in both objective and subjective terms.

Moreover, many experiments indicate that the proposed approach generalizes well: it works when the images come from the same or from different types of sensors, and extensions to more than two images can be developed in a similar way.

References

[1] A. Krista, Yun Zhang, and Peter Dare. Wavelet based image fusion techniques - An introduction, review and comparison, ISPRS Journal of Photogrammetry & Remote Sensing, vol. 62, pp. 249-263, (2007).

[2] A. Toet. Image fusion by a ratio of low-pass pyramid, Pattern Recognition Letters, vol. 9, pp. 245-253, (1989).

[3] A. R. Gillespie, A. B. Kahle, and R. E. Walker. Color enhancement of highly correlated images II. Channel ratio and chromaticity transformation technique, Remote Sensing of Environment, vol. 22, no. 3, pp. 343-365, (1987).

[4] C. Yanmei, Rongchun Zhao, and Jinchang Ren. Self-adaptive image fusion based on multi-resolution decomposition using wavelet packet analysis, IEEE Proceedings of the 3rd International Conference on Machine Learning and Cybernetics, vol. 7, pp. 4049-4053, (2004).

[5] C. Youcef, and Amrane Houacine. Redundant versus orthogonal wavelet decomposition for multisensor image fusion, Pattern Recognition, vol. 36, pp. 879-887, (2003).

[6] C. Youcef. Additive integration of SAR features into multispectral SPOT images by means of the à trous wavelet decomposition, ISPRS Journal of Photogrammetry & Remote Sensing, vol. 60, pp. 306-314, (2006).

[7] C. Pohl, and J. L. van Genderen. Multisensor image fusion in remote sensing: Concepts, methods and applications, International Journal of Remote Sensing, vol. 19, pp. 823-854, (1998).

[8] H. Li, B. S. K. Rogers, and L. R. Meyers. Multisensor image fusion using the wavelet transform, Graphical Models and Image Processing, vol. 57, no. 3, pp. 235-245, (1995).

[9] M. Ehlers. Multisensor image fusion techniques in remote sensing, ISPRS Journal of Photogrammetry & Remote Sensing, vol. 51, pp. 311-316, (1991).

[10] M. J. Shensa. The discrete wavelet transform: wedding the à trous and Mallat algorithms, IEEE Transactions on Signal Processing, vol. 40, no. 10, pp. 2464-2482, (1992).

[11] M. Santos, G. Pajares, M. Portela, and J. M. de la Cruz. A New Wavelets Image Fusion Strategy, Lecture Notes in Computer Science, vol. 2652, pp. 919-926, (2003).

[12] N. Jorge, Xavier Otazu, Octavi Fors, Albert Prades, Vicenc Pala, and Roman Arbiol. Multiresolution-based image fusion with additive wavelet decomposition, IEEE Transactions on Geoscience and Remote Sensing, vol. 37, no. 3, pp. 1204-1211, (1999).

[13] P. Gonzalo, and Jesus Manuel de la Cruz. A wavelet-based image fusion tutorial, Pattern Recognition, vol. 37, pp. 1855-1872, (2004).

[14] P. J. Burt, and Edward H. Adelson. The Laplacian pyramid as a compact image code, IEEE Transactions on Communications, vol. 31, no. 4, pp. 532-540, (1983).

[15] P. J. Burt, and R. J. Kolczynski. Enhanced image capture through fusion, IEEE Conference of Computer Vision, vol. 4, pp. 173-182, (1993).

[16] Q. Guihong, Dali Zhang, and Pingfan Yan. Medical image fusion by wavelet transform modulus maxima, Optics Express, vol. 9, no. 4, pp. 184-190, (2001).

[17] Stephane G. Mallat. A theory for multiresolution signal decomposition: the wavelet representation, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 11, no. 7, pp. 674-693, (1989).

[18] T. Teming, Shunchi Su, Hsuenchyun Shyu, and Ping S. Huang. A new look at IHS-like image fusion methods, Information Fusion, vol. 2, pp. 177-186, (2001).

[19] U. Michael. Texture classification and segmentation using wavelet frames, IEEE Transactions on Image Processing, vol. 4, no. 11, pp. 1549-1560, (1995).

[20] V. K. Shettigara. A generalized component substitution technique for spatial enhancement of multispectral images using a higher resolution data set, Photogrammetric Engineering and Remote Sensing, vol. 58, pp. 561-567, (1992).

[21] Z. Zhong, and Rick S. Blum. A categorization of multiscale-decomposition-based image fusion schemes with a performance study for a digital camera application, Proceedings of the IEEE, vol. 87, no. 8, pp. 1315-1326, (1999).
